As long as we do not understand the problems created by implementing and sustaining silo driven solutions, we are going to face a lot of data redundancy, data inaccuracy and erroneous decision making systems. It is obvious that data needs to be holistic in its relationship, in order to achieve accuracy in everything around data, be it machine learning, decision systems or automation.

In order to fix these silo problems and save on investments already made, our primary step would be to design a data architecture that incorporates a relationship map between data attributes . This would take data isolation and redundancy out of scope, and allow the system to scale , even when we collect new data attributes in the future

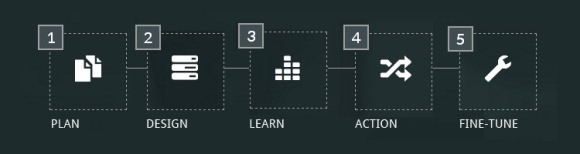

Such a data architecture, can allow us to employ finite state automation and deploy automation processes over your existing data infrastructure . However complex might be the big data environment, deploying the unified architecture can simplify the way data is stored and synthesized. We employ the following 5 steps to turn your data chaos into a structured and intelligent utility

- Understanding your data ecosystem and its relationship

The first step would encompass creating a detailed list of all available data sources and documenting the data attributes, functions and business flows. This acts as the Mise en place for creating the unified design. - Design the data schema with hierarchies and relationship

With the bucket list of all data attributes and its relational occurrences, we can setup the data hierarchy, state tags and node level rule table. On creation of the final design , a simple data parser can tag data in your warehouse, as per the design specs - Machine learning to learn state change from data patterns

Now the data is ready for analysis, holistic pattern detection and learning. Using supervised learning techniques, data can be synthesized to understand and choose optimal patterns that satisfies a given flow and extract possible rules and sub states, to incorporate in the rule library. - Enable automation rules and triggers.

Now that the machine can achieve a state of decision, we can now configure intermediary rules for automation to perform based on various sub states. Using the real-time close-loop data automation, data is scored and staged to pertinent states, enabling rule based state automation - Fine tune

Now that the automation framework is ready for your data, we can now allow it to run on sample data and fine tune all rules and weight distribution. On a successful test run, we can deploy it on a parallel environment to understand the effects of its automation in real business scenarios

With these 5 steps, the data environment would be ready to learn and perform based on supervised learning techniques and bring about a substantial positive change in terms of effectiveness, growth, costs and time to action.